Create Policy and Role

In IAM, we will create a role that allows an EC2 instance to write and list CloudWatch Logs. Go to IAM and select Policies. Create a new policy with the following JSON:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents",
        "logs:DescribeLogStreams"
      ],
      "Resource": [
        "*"
      ]
    }
  ]
}
```

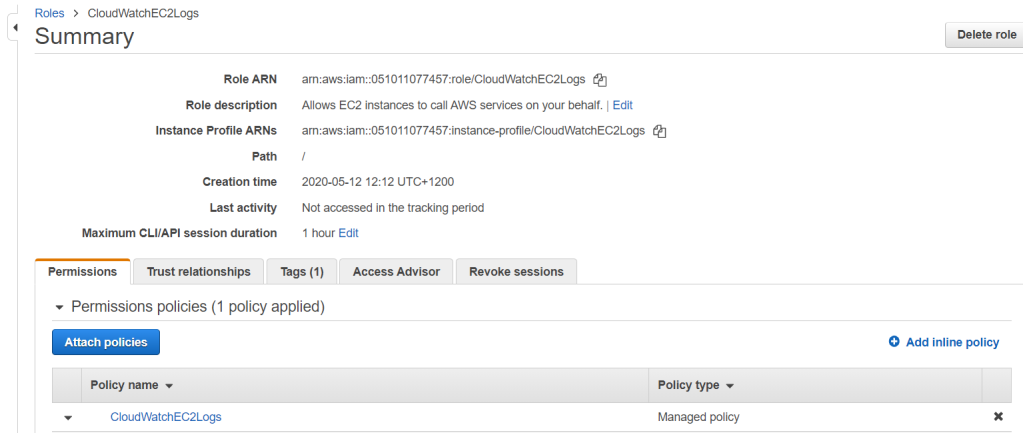
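If you would rather script this step than use the console, the AWS CLI can create the same policy. The sketch below assumes the CLI is installed and configured with credentials that are allowed to manage IAM. The function name `create_logs_policy`, the policy name `EC2CloudWatchLogsPolicy`, and the file name `cloudwatch-logs-policy.json` are hypothetical placeholders.

```bash
# Sketch: create the CloudWatch Logs policy with the AWS CLI instead of the console.
# Policy name and file name are hypothetical placeholders.
create_logs_policy() {
  local name="${1:-EC2CloudWatchLogsPolicy}"
  local doc="${2:-cloudwatch-logs-policy.json}"

  # Same policy document as the JSON shown above.
  cat > "$doc" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents",
        "logs:DescribeLogStreams"
      ],
      "Resource": ["*"]
    }
  ]
}
EOF

  # Print only the new policy's ARN, which is needed to attach it to a role.
  aws iam create-policy \
    --policy-name "$name" \
    --policy-document "file://$doc" \
    --query 'Policy.Arn' --output text
}
```

Running `create_logs_policy` with no arguments would print an ARN of the form `arn:aws:iam::<account-id>:policy/EC2CloudWatchLogsPolicy`.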

Now create a role with this policy attached, choosing AWS service > EC2 as the trusted entity. This role is what gives the instance permission to write logs to CloudWatch.
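The role can be scripted the same way. This sketch assumes configured CLI credentials and uses a hypothetical function name; it writes a trust policy that lets EC2 assume the role, attaches the policy by its ARN, and creates an instance profile, which is the container EC2 actually attaches to an instance.

```bash
# Sketch: create the role, attach the logs policy, and wrap the role in an
# instance profile. Role name and policy ARN are supplied by the caller.
create_logs_role() {
  local role="$1" policy_arn="$2"

  # Trust policy: allows the EC2 service to assume this role for the instance.
  cat > ec2-trust-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

  aws iam create-role --role-name "$role" \
    --assume-role-policy-document file://ec2-trust-policy.json || return
  aws iam attach-role-policy --role-name "$role" \
    --policy-arn "$policy_arn" || return

  # The console creates a same-named instance profile automatically; the CLI
  # does not, so create it and put the role inside it.
  aws iam create-instance-profile --instance-profile-name "$role" || return
  aws iam add-role-to-instance-profile \
    --instance-profile-name "$role" --role-name "$role"
}
```

For example: `create_logs_role EC2CloudWatchLogsRole arn:aws:iam::<account-id>:policy/EC2CloudWatchLogsPolicy`.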

Create Instance and Attach Role

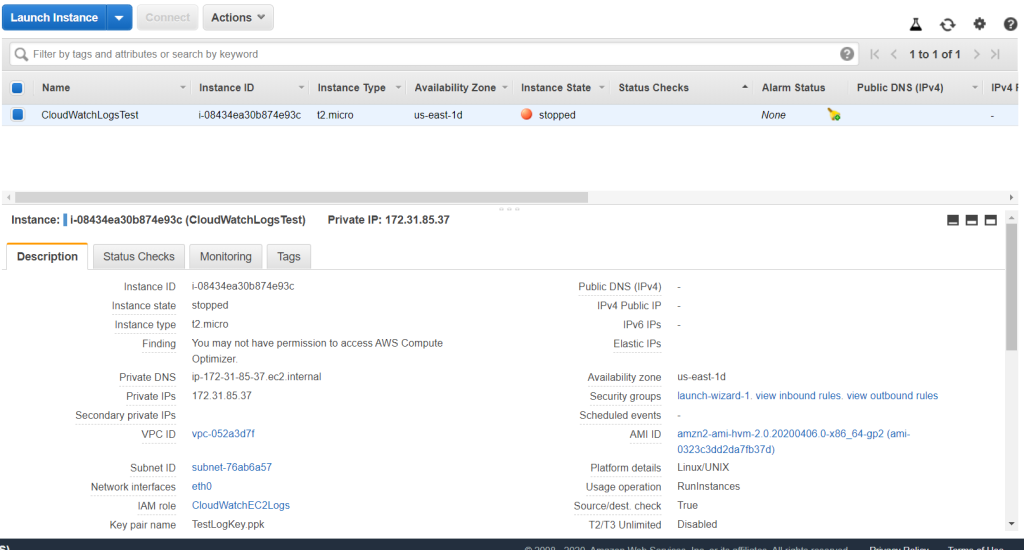

Launch an instance from the Amazon Linux 2 AMI with whatever configuration you prefer. For testing, I used a low-spec instance type and left every other setting at its default, including the VPC and security group. When you click Launch, create a new key pair or choose an existing one.
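For reference, a CLI version of the launch might look like the sketch below. The function name is hypothetical, the key pair name and security group ID are placeholders you must supply, and `t2.micro` stands in for "a low specification". The image ID is resolved from the public SSM parameter for the latest Amazon Linux 2 AMI. Unlike the console wizard, the CLI does not create a security group that opens SSH for you, so the group you pass (in the default VPC) should allow inbound port 22 from your IP.

```bash
# Sketch: launch one Amazon Linux 2 instance from the CLI.
# Key pair name and security group ID are caller-supplied placeholders.
launch_test_instance() {
  local key_name="$1" sg_id="$2" instance_type="${3:-t2.micro}"
  aws ec2 run-instances \
    --image-id resolve:ssm:/aws/service/ami-amazon-linux-latest/amzn2-ami-hvm-x86_64-gp2 \
    --instance-type "$instance_type" \
    --key-name "$key_name" \
    --security-group-ids "$sg_id" \
    --count 1 \
    --query 'Instances[0].InstanceId' --output text   # print just the new instance ID
}
```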

Attach the role to the instance so that it can write logs to CloudWatch. Right-click the instance and go to Instance Settings > Attach/Replace IAM Role, choose the role we just created, and click Apply.
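Attaching the role to a running instance also has a CLI equivalent. This sketch assumes the instance profile has the same name as the role, which is what the console creates; the function name, instance ID, and profile name are placeholders.

```bash
# Sketch: associate an existing instance profile with a running instance.
attach_logs_profile() {
  local instance_id="$1" profile="$2"
  aws ec2 associate-iam-instance-profile \
    --instance-id "$instance_id" \
    --iam-instance-profile Name="$profile"
}
```

For example: `attach_logs_profile i-0123456789abcdef0 EC2CloudWatchLogsRole`.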

Set Up CloudWatch Agent

Make sure you have PuTTY installed and the private key from the instance's key pair. PuTTY expects the key in .ppk format, so convert the downloaded .pem file with PuTTYgen first. With the instance running, open PuTTY and load the private key under SSH > Auth. Paste the instance's Public DNS (IPv4) into the Host Name field and make sure the connection type is SSH. Click Open to connect. If PuTTY shows a security alert about the host key, click Yes to trust the host.

Log in as ec2-user, the default user on Amazon Linux 2, and you are now connected to the EC2 instance.
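If you are on macOS, Linux, or a Windows version that ships the OpenSSH client, you can skip PuTTY and connect with `ssh` using the downloaded .pem file directly, with no conversion. A minimal sketch; `connect_ec2` is a hypothetical helper and the key path and host name are placeholders.

```bash
# Sketch: connect with OpenSSH instead of PuTTY.
connect_ec2() {
  local key="$1" host="$2"
  chmod 400 "$key"                 # ssh rejects private keys that others can read
  ssh -i "$key" "ec2-user@$host"   # ec2-user is the default Amazon Linux 2 user
}
```

For example: `connect_ec2 ~/Downloads/my-key.pem <public-dns>`.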

Before anything else, update Amazon Linux to the latest packages:

```bash
sudo yum update -y
```

Once that completes, install the awslogs package:

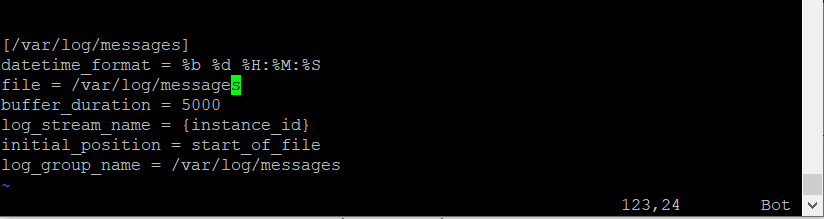

```bash
sudo yum install -y awslogs
```

The installation should finish quickly. The agent's configuration file is /etc/awslogs/awslogs.conf, and by default it only ships /var/log/messages. We will leave it as is.

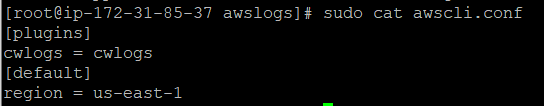
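To check what the agent is configured to ship, you can print just the `[/var/log/messages]` stanza of /etc/awslogs/awslogs.conf. The `show_section` helper below is a hypothetical awk wrapper; on a fresh install the stanza should include `file = /var/log/messages`, `log_group_name = /var/log/messages`, and `log_stream_name = {instance_id}`.

```bash
# Sketch: print one [section] of an INI-style file such as awslogs.conf,
# from its header line up to (but not including) the next header.
show_section() {
  local conf="$1" section="$2"
  awk -v hdr="[$section]" '
    /^\[/ { inside = ($0 == hdr) }   # each header line decides if we are inside
    inside                           # print lines while inside the wanted section
  ' "$conf"
}
```

For example: `show_section /etc/awslogs/awslogs.conf /var/log/messages`.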

You can also configure the region your logs are sent to. It is set in /etc/awslogs/awscli.conf, which defaults to us-east-1.
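The region is a single `region = ...` line in /etc/awslogs/awscli.conf, so switching it is a one-line substitution. This sketch assumes GNU sed (as shipped with Amazon Linux 2) and a root shell, for example after `sudo -i`; `set_awslogs_region` and `eu-west-1` are only examples. Restart the agent afterwards so it picks up the change.

```bash
# Sketch: rewrite the region line of the awslogs CLI config in place.
set_awslogs_region() {
  local region="$1" conf="${2:-/etc/awslogs/awscli.conf}"
  sed -i "s/^region[[:space:]]*=.*/region = ${region}/" "$conf"
}
```

For example: `set_awslogs_region eu-west-1 && systemctl restart awslogsd`.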

Now start the service. Note that this command is for Amazon Linux 2; on the older Amazon Linux AMI the equivalent is `sudo service awslogs start`.

```bash
sudo systemctl start awslogsd
```

If something goes wrong, check the agent's own log file, /var/log/awslogs.log.
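A quick way to check both the service state and recent problems at once is sketched below. `awslogs_health` is a hypothetical helper; it assumes the default log path and simply filters for lines mentioning errors or warnings, without relying on any particular log format.

```bash
# Sketch: quick health check for the awslogs agent on Amazon Linux 2.
awslogs_health() {
  local log="${1:-/var/log/awslogs.log}"
  systemctl is-active awslogsd                  # prints "active" when running
  grep -iE 'error|warning' "$log" | tail -n 20  # most recent problem lines
}
```

Run it with `sudo` if the log file is not readable by your user.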

To start awslogs automatically every time the system boots, run:

```bash
sudo systemctl enable awslogsd.service
```

The setup is done, and the logs should now appear in CloudWatch. Go to Services > CloudWatch and select Log groups in the left pane. You should see the /var/log/messages group; inside it is a log stream named after the instance ID that holds the instance's logs.
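You can also confirm from the command line that streams are being written. This sketch assumes the AWS CLI is configured for the same region as the agent and with permission to call `logs:DescribeLogStreams` (the instance role above grants it); `latest_streams` is a hypothetical name.

```bash
# Sketch: list the most recently written log streams in a log group.
# With the default awslogs.conf, stream names are instance IDs.
latest_streams() {
  local group="${1:-/var/log/messages}"
  aws logs describe-log-streams \
    --log-group-name "$group" \
    --order-by LastEventTime --descending \
    --max-items 5 \
    --query 'logStreams[].logStreamName' --output text
}
```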

Critical Thinking

CloudWatch Logs can also be a powerful tool for securing your infrastructure. Authentication logs are one example: they record every attempt to access the instance (on Amazon Linux 2, SSH logins are written to /var/log/secure), which helps an administrator spot suspicious activity such as a breach or a brute-force attack. The boot log is another, giving insight into unexpected boot failures and downtime.
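To ship the authentication log as well, you could append a stanza for /var/log/secure, where Amazon Linux 2 records SSH authentication events, modeled on the default /var/log/messages stanza. A sketch under those assumptions: `add_awslogs_stanza` is hypothetical, it must run as root, and the agent needs a restart afterwards. The new log group is named after the file, just like the default one.

```bash
# Sketch: append a stanza that ships another syslog-format file,
# copying the field values used by the default /var/log/messages stanza.
add_awslogs_stanza() {
  local file="$1" conf="${2:-/etc/awslogs/awslogs.conf}"
  cat >> "$conf" <<EOF

[$file]
datetime_format = %b %d %H:%M:%S
file = $file
buffer_duration = 5000
log_stream_name = {instance_id}
initial_position = start_of_file
log_group_name = $file
EOF
}
```

For example: `add_awslogs_stanza /var/log/secure && systemctl restart awslogsd`.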

In short, every log on an instance can serve a monitoring purpose. CloudWatch can collect them all and organize them into log groups, giving a clearer view of the state of your instances.

Beyond EC2 instances, CloudWatch can collect logs from other services as well. For Lambda functions, for example, CloudWatch tracks invocations, errors, and more. Request activity in S3 is another area that CloudWatch logs and metrics can show.

However, with many logs arriving from different log groups, parsing becomes an important step: it turns raw logs into digestible statistics and makes searching easier for analytics. Amazon Kinesis is also useful for processing application logs in real time; it can process the log streams coming from an application and push the results to CloudWatch for monitoring and alarms.
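Within CloudWatch itself, a metric filter is one simple form of parsing: it matches log events against a pattern and publishes a metric you can graph or alarm on. The sketch below assumes /var/log/secure is already shipped to a log group of the same name; the filter, metric, and namespace names are hypothetical, and `"Failed password"` is the phrase sshd typically logs for a rejected password.

```bash
# Sketch: emit a metric value of 1 for every log event containing "Failed password".
# Log group default, filter, metric and namespace names are placeholders.
add_failed_ssh_metric() {
  local group="${1:-/var/log/secure}"
  aws logs put-metric-filter \
    --log-group-name "$group" \
    --filter-name FailedSSHPasswords \
    --filter-pattern '"Failed password"' \
    --metric-transformations \
      metricName=FailedSSHPasswords,metricNamespace=EC2/Security,metricValue=1
}
```

A CloudWatch alarm on `FailedSSHPasswords` could then flag a possible brute-force attempt.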